Study design

This was a mixed methods intervention study using a concurrent nested/embedded/convergent design conducted in Kenya and Nigeria in May and June 2023. This was designed to evaluate the feasibility of the midwifery educator CPD programme. The goal was to obtain different but complementary data to better understand the CPD programme with the data collected from the same participants or similar target populations [37].

The quantitative component of the evaluation used quasi-experimental pre-post and post-test-only designs to evaluate the effectiveness of the blended CPD programme intervention among midwifery educators from mid-level training colleges and universities in the two countries. Pre- and post-intervention evaluation of knowledge (online self-directed component) and skills (developing a teaching plan during the face-to-face component) was performed. Post-intervention evaluation of programme satisfaction, relevance of the CPD programme and microteaching sessions for educators was also conducted.

The qualitative component of the evaluation included open-ended written responses from the midwifery educators and master trainers describing what worked well (enablers), challenges/barriers experienced in the blended programme and key recommendations for improvement. In addition, key informant interviews with the key stakeholders (nursing and midwifery councils and the national heads of training institutions) were conducted. Data on challenges anticipated in the scale up of the programme and measures to promote sustainability, access and uptake of the programme were collected from both educators and key stakeholders.

A mixed methods design was used for its strengths: (i) it allowed the two types of data (quantitative and qualitative) to be collected simultaneously, during a single data collection phase; (ii) it provided the study with the advantages of both quantitative and qualitative data; and (iii) it helped gain perspectives and contextual experiences from the different types of data or from different levels (educators, master trainers, heads of training institutions and nursing and midwifery councils) within the study [38, 39].

Setting

The study was conducted in Kenya and Nigeria. Kenya has over 121 mid-level training colleges and universities offering nursing and midwifery training while Nigeria has about 300. Because of Nigeria's size, representative government-owned nursing and midwifery training institutions were randomly selected from each of the six geo-political zones in the country and the Federal Capital Territory. In the two countries, mid-level training colleges offer integrated nursing and midwifery training at diploma level while universities offer integrated nursing and midwifery training at bachelor/master degree level (three universities in Kenya offer midwifery training at bachelor level). All nurse-midwives and midwives trained at both levels are expected to possess ICM competencies to care for the woman and newborn. Midwifery educators in Kenya and Nigeria are required to have at least advanced diploma qualifications, although years of clinical experience are not specified.

It is a mandatory requirement of the Nursing and Midwifery Councils in both countries for nurse/midwives and midwifery educators to demonstrate evidence of CPD for renewal of their practising license [40, 41]. A minimum of 20 CPD points (equivalent to 20 credit hours) is recommended annually for Kenya and 60 credit hours every three years for Nigeria. However, no specific midwifery educator CPD programme that incorporates both face-to-face and online modes of delivery is available for Kenya and Nigeria, or indeed for many countries in the region. Nursing and midwifery educators are registered and licensed to practice nursing and midwifery, while those from other disciplines who teach in the midwifery programme are qualified in the content they teach.

Study sites

In Kenya, two mid-level colleges (Nairobi and Kakamega Kenya Medical Training Colleges (KMTCs)) and two universities (Nairobi and Moi Universities) were identified as CPD Centres of Excellence (COEs)/hubs, based on the geographical distribution of the training institutions. In Nigeria, two midwifery schools (Centre of Excellence for Midwifery and Medical Education, College of Nursing and Midwifery, Illorin, Kwara State and Centre of Excellence for Midwifery and Medical Education, School of Nursing Gwagwalada, Abuja, FCT) were identified. These centres were equipped with teaching and emergency obstetrics and newborn care (EmONC) training equipment for the practical components of the CPD programme. The centres were selected based on the availability of spacious training labs/classes specific for skills training and storage of equipment, and of an EmONC master trainer among the educators in the institution. They were designated as host centres for the capacity strengthening of educators in EmONC and teaching skills.

Intervention

Nursing and midwifery educators accessed and completed 20 h of free, self-directed online modules on the WCEA portal and face-to-face practical sessions in the CPD centres of excellence.

The design of the midwifery educator CPD programme

The design of the CPD modules was informed by the existing gap for professional development for midwifery educators in Kenya and other LMICs and the need for regular updates in knowledge and skills competencies in delivery of teaching [9, 15, 23, 28]. Liverpool School of Tropical Medicine led the overall design of the nursing and midwifery educator CPD programme (see Fig. 1 for summarised steps taken in the design of the blended programme).

This was a two-part blended programme with a 20-hour self-directed online learning component (accessible through the WCEA platform at no cost) and a 3-day face-to-face component, designed to cover theoretical and practical skills components respectively. The 20-hour self-directed online component had four 5-hour modules on reflection practice, teaching/learning theories and methods, student assessments, and effective feedback and mentoring. These modules had pretest and post-test questions and were interactive, with short videos, short quizzes within modules, and links for further directed reading and resources to promote active learning. This online component is also available on the WCEA platform as a resource for other nursing and midwifery educators across the globe.

Practical aspects of competency-based teaching pedagogy, clinical teaching skills including selected EmONC skills, giving effective feedback, applying digital innovations in teaching and learning for educators, and critical thinking and appraisal were delivered through a 3-day residential face-to-face component in designated CPD centres of excellence. Specific skills included: planning and preparing teaching sessions (lesson plans), teaching practical skills methodologies (lecture, simulation, scenario and role plays), selected EmONC skills, managing teaching and learning sessions, assessing students, providing effective feedback and mentoring, and use of online applications such as Mentimeter and Kahoot in formative classroom assessment of learning. Selected EmONC skills delivered were shoulder dystocia, breech delivery, assisted vaginal delivery (vacuum assisted birth), managing hypovolemic shock and pre-eclampsia/eclampsia, and newborn resuscitation. These were designed to reinforce the competencies of educators in using contemporary teaching pedagogies. The goal was to combine theory and practical aspects of effective teaching as well as to provide a high-quality, evidence-based learning environment and support for students in midwifery education [4]. To this end, the modules integrated the ICM essential competencies for midwifery practice. The pre- and post-tests form part of the CPD programme as a standard assessment of the educators.

As part of the design, this programme was piloted among 60 midwifery educators and regulators from 16 countries across Africa at the UNFPA funded Alliance to Improve Midwifery Education (AIME) Africa regional workshop in Nairobi in November 2022. They accessed and completed the self-directed online modules on the WCEA platform, participated in selected practical sessions, self-evaluated the programme and provided useful feedback for strengthening the modules.

The Nursing and Midwifery Councils of Kenya and Nigeria host online CPD courses from individuals or organisations on the WCEA portal. In addition, the Nursing Council of Kenya provides opportunities for self-reporting of various CPD events including accredited online CPD activities/programmes, skill development workshops, conferences and seminars, in-service short courses, and practice-based research projects (as learner, principal investigator, principal author, or co-author), among others. In Nigeria, a certificate of attendance for the Mandatory Continuing Professional Development Programme (MCPDP) is required as evidence of CPD during license renewal. However, accredited CPD programmes specific to midwifery educators are not available in either country or in the Africa region [15, 42].

Fig. 1 Midwifery educator CPD programme design stages

Participants and sample size

Bowen and colleagues suggest that many feasibility studies are designed to test an intervention in a limited way and such tests may be conducted in a convenience sample, with intermediate rather than final outcomes, with shorter follow-up periods, or with limited statistical power [34].

A convenience random sample across the two countries was used. Sample size calculations were based on the formula for a 95% confidence interval for the estimation of a proportion: \(p \pm 1.96\sqrt{\frac{p(1-p)}{n}}\), where p is the proportion and n is the sample size. The margin of error (d) is the second term in the equation. To calculate the detectable percentage change in competence, Stata’s power paired proportion function was used.

To achieve the desired low margin of error of 5% and a 90% power (value of proportion) to detect a change in competence after the training, a sample of 120 participants was required. With the same sample assessed for competence before and after training, so that the improvement in the percentage competent can be derived, and assuming that 2.5% are assessed as competent prior to training but not after training (regress), a 90% power would allow detection of a 12% improvement in competence after the training.
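The margin-of-error term in the confidence interval formula can be checked with a short calculation. The sketch below is illustrative only: it assumes that the "value of proportion" refers to p = 0.90 and uses n = 120; the function name is hypothetical, and the calculation is not the Stata routine used in the study.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width d of the normal-approximation 95% CI for a proportion."""
    return z * math.sqrt(p * (1.0 - p) / n)


# Assumed inputs for illustration: proportion 0.90 and the planned sample of 120.
assumed_p = 0.90
planned_n = 120
d = margin_of_error(assumed_p, planned_n)
print(f"margin of error for p={assumed_p}, n={planned_n}: {d:.3f}")
```

Under these assumed inputs the margin of error is a little above 0.05, which is consistent with the stated target of roughly 5%.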

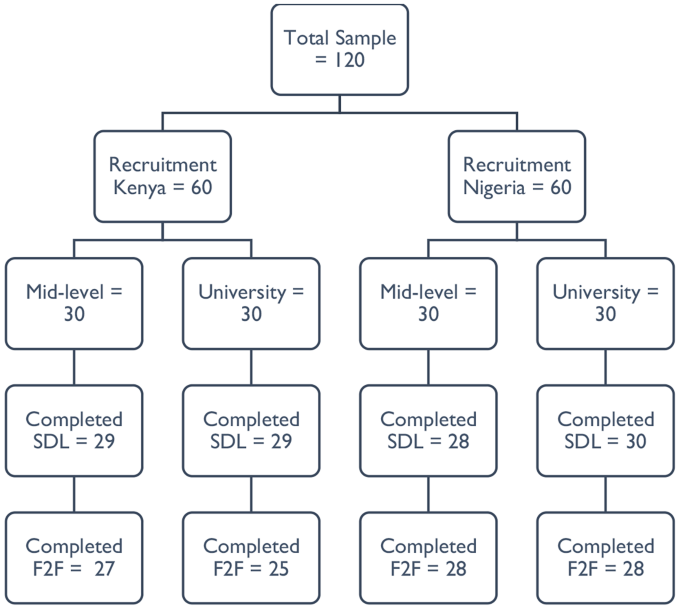

A random sample of 120 educators (60 each from Kenya and Nigeria; 30 each from mid-level training colleges and universities) was invited by email to participate in the two components of the CPD programme (Fig. 2). Importantly, only participants who completed the self-directed online modules were eligible to progress to the face-to-face practical component.

Fig. 2 Flow of participants in the CPD programme (SDL = self-directed online learning; F2F = face-to-face practical)

For qualitative interviews, eight key informant interviews were planned with a representative each from the Nursing and Midwifery Councils, mid-level training institutions’ management, university and midwifery associations in both countries. Interviews obtained data related to challenges anticipated in the scale up of the programme and measures to promote sustainability, access and uptake of the programme.

Participant recruitment

Only nursing and midwifery educators registered and licensed by the Nursing and Midwifery Councils were eligible and participated. This was because the WCEA website hosting the self-directed online programme is reached via the Nursing and Midwifery Councils’ websites, which are accessible only to registered and licensed nurses and midwives.

The recruitment process was facilitated through the central college management headquarters (for mid-level training colleges’ educators) and Nursing and Midwifery Councils (for university participants). Training institutions’ heads of nursing and midwifery departments were requested to share the contact details of all educators teaching midwifery modules, particularly the antepartum, intrapartum, postpartum and newborn care modules in the two countries. A list of 166 midwifery educators from 81 universities and mid-level training colleges was obtained through the Heads of the Department in the institutions.

The research lead, with the assistance of the co-investigator from Nigeria, then randomly sampled 120 educators based on institution type and region for representativeness across the countries. Following the selection of participants, the two investigators shared the detailed electronic participant study information sheet and consent form with the potential participants one week before the start of the self-directed online modules. Clear guidance on the conduct of the two-part programme was provided, with emphasis on completing the four mandatory self-directed online modules. Due to the large number of eligible participants, the recruitment and consenting process was closed after the first 30 participants per institution type and region had consented, with 1–2 educators per institution randomly recruited. This allowed as many institutions as possible to be represented across the countries. Participants received by email a study information sheet and an auto-generated copy of their completed electronic consent form. Other opportunities for participating in the two-part programme were provided as appropriate for those who missed out. Only those who completed the four online modules were invited for the practical component. A WhatsApp community group for the recruited participants was formed for clarifications about the study and for troubleshooting challenges with online access and completion of the modules before and during the programme.

Self-directed online component

Upon consenting, the contact details of the educators from each level were shared with the WCEA program director for generation of a unique identification code to access the self-directed online modules on the WCEA portal. Educators completed their baseline characteristics (demographic and academic) on the online platform just before the modules. Each self-directed online module was estimated to take five hours to complete. A participant was allowed to progress to the next module only after completing the previous one. The modules were available for participants to complete at their own pace and schedule. An auto-generated certificate of completion with the participant’s post-completion score was awarded as evidence of completing a module. Participants completed a set of 20 similar pretest and post-test multiple choice questions in each module as a knowledge check. A dedicated WCEA staff member actively provided technical support for educators to register, access and complete the online modules. At the end of each module, participants completed a self-evaluation on a 5-point Likert scale for satisfaction (0 = very unsatisfied, 1 = unsatisfied, 2 = neutral, 3 = satisfied and 4 = very satisfied) and relevance of the modules (0 = very irrelevant, 1 = irrelevant, 2 = neutral, 3 = relevant and 4 = very relevant). This captured participants’ reactions to the different components of the modules and whether they met the individual educator’s development needs. In addition, participants responded to open-ended questions at the end of the modules on what they liked about the modules, challenges encountered in completing the modules and suggestions for improvement of the modules. Educators were given a maximum of two weeks to complete the modules before progressing to the practical component.

Practical component

The practical component was delivered by a pool of 18 master trainers who received a 1-day orientation from the research lead before the training. The master trainers, selected from Kenya and Nigeria, were a blend of midwifery and obstetrics faculty experienced in teaching and clinical practice and actively engaged in facilitating EmONC trainings. Four of these master trainers from Kenya participated in the delivery of both sets of trainings in Kenya and Nigeria.

Only educator participants who completed the self-directed online modules and were certified were invited to participate in the 3-day residential practical component. Two separate classes (mid-level and university level educators) were trained per country by the same group of eight master trainers. The sessions were delivered through short interactive lectures; small group and plenary discussions; skills demonstrations/simulations and scenario teaching in small breakout groups; role plays and debrief sessions. Sessions on digital innovations in teaching and learning were live practical sessions with every participant using their own laptop. Nursing and Midwifery Councils representatives and training institutions’ managers were invited to participate in both components of the programme.

Participants’ costs for taking part in the two-part CPD programme were fully covered by the study. These included internet data for completing the self-directed online component and residential costs (transport, accommodation, and meals) during the practical component.

Data collection

Data from the self-directed online component were extracted from the WCEA platform in Microsoft Excel. These comprised knowledge pretest and post-test results, self-rated measures of satisfaction and relevance of the modules, and free-text responses on what participants liked about the modules, challenges encountered in accessing and completing the modules, and suggestions for improvement.

On day 1 of the practical component, participants developed a teaching plan using their personal computers. On the last day (day 3), participants prepared a teaching plan and PowerPoint presentation for the microteaching sessions. No teaching plan template was provided by the trainers before the training; however, participants used formats from their institutions if available. A standard teaching plan template was provided at the end of the training.

The master trainers and participants were divided into groups for the microteaching sessions, which formed part of the formative assessment. Each participant delivered a PowerPoint presentation on a topic of interest (covered in the teaching plan) to a small group of 13–15 participants. This was followed by a structured session of constructive feedback that started with a self-reflection and assessment, followed by supportive and constructive feedback from peers in the audience and from faculty/master trainers, identifying areas of effective practice and opportunities for further development. Each microteaching session lasted 10–15 min. Each microteaching presentation and teaching plan was evaluated against a pre-determined electronic checklist by two designated faculty members independently during or immediately after the microteaching session. The checklist was adapted from LSTM’s microteaching assessment of the United Kingdom’s Higher Education Academy (HEA)’s Leading in Global Health Teaching (LIGHT) programme. The evaluation included preparing a teaching plan, managing a teaching and learning session using multiple interactive activities, designing and conducting formative assessments for learning using digital/online platforms, and giving effective feedback and critical appraisal. The master trainers received an orientation training on the scoring checklist from the lead researcher/corresponding author.

Self-rated confidence in different teaching pedagogy skills was evaluated before (on day 1) and after (day 3) the training on a 5-point Likert scale (0 = not at all confident, 1 = slightly confident, 2 = somewhat confident, 3 = quite confident and 4 = very confident). An evaluation of satisfaction with, and relevance of, the practical component on a 5-point Likert scale was completed by the participants on an online form on day 3, after the microteaching sessions. This form also included a qualitative survey with open-ended questions on what they liked about the practical component, challenges encountered in completing the practical component and suggestions for improvement of the component.

Using a semi-structured interview guide, six qualitative key informant interviews, each lasting about 30–45 min, were conducted by the lead researcher with the Nursing and Midwifery Councils focal persons and training institutions’ managers. The interviews were conducted in English and audio recorded; the recordings were anonymized and deleted after transcription. The interviews aimed to obtain the informants’ perspectives on the programme design, anticipated barriers/enablers with the CPD programme and strategies for promoting uptake of the CPD programme. The six interviews were considered adequate due to their information power (the more information relevant to the study the sample holds, the fewer participants are needed) [43] and upon reaching data saturation, considered the cornerstone of rigor in qualitative research [44, 45].

Assessment of outcomes

Participants’ reaction to the programme (satisfaction and relevance) (Kirkpatrick level 1) was tested using the self-rated 5-point Likert scales. Change in knowledge, confidence and skills (Kirkpatrick level 2) was tested as follows: knowledge through 20 pretest and post-test multiple choice questions per module in the self-directed online modules; confidence in applying different pedagogy skills through the self-rated 5-point Likert scale; and teaching skills through the observed microteaching sessions using a checklist.

Reliability and validity of the data collection tools

The internal consistency (a measure of the reliability, generalizability or reproducibility of a test) of the Likert scales/tools assessing the relevance of the online and practical modules and the satisfaction of educators with the two blended modules was tested using the Cronbach’s alpha statistic. The Cronbach’s alpha statistics for the four Likert scales/tools ranged from 0.835 to 0.928, all indicating a good to excellent level of reliability [46]. Validity (which refers to the accuracy of a measure) of the Likert scales was tested using the Pearson correlation coefficient statistic. Obtained correlation values were compared to the critical values and p-values reported at 95% confidence intervals. All the scales were valid with obtained Pearson correlation coefficients reported − 0.1946, which were all greater than the critical values (p < 0.001) [46]. The semi-structured interview guides for the qualitative interviews with the training institutions’ managers and midwifery councils (regulators) were developed and reviewed by expert study team members with experience in qualitative research.
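For readers unfamiliar with the statistic, Cronbach’s alpha for a k-item scale is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The following is a minimal sketch using only the Python standard library; the responses are made up for illustration and are not study data, and the study itself computed the statistic in SPSS.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(rows[0])
    item_columns = list(zip(*rows))  # one tuple of scores per item
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical 0-4 Likert responses: six respondents, four items.
responses = [
    [4, 4, 3, 4],
    [3, 3, 3, 3],
    [2, 3, 2, 2],
    [4, 3, 4, 4],
    [1, 2, 1, 2],
    [3, 4, 3, 3],
]
alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha: {alpha:.3f}")
```

Values closer to 1 indicate that the items vary together, which is the sense in which the reported range of 0.835 to 0.928 is read as good to excellent reliability.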

Data management and analysis

Data from the online/electronic tools were extracted in Microsoft Excel and exported to SPSS version 28 for cleaning and analysis. Normality of data was tested using the Kolmogorov-Smirnov test, which is suitable for samples above 50. Proportions of educator characteristics in the two countries were calculated. Differences between the educator characteristics in the two countries were tested using chi-square tests (and Fisher’s exact test for cells with counts of less than 5).
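Fisher’s exact test replaces the chi-square approximation when cell counts are small by summing hypergeometric probabilities directly. The sketch below shows one common two-sided definition (summing the probabilities of all tables no more likely than the observed one) for a 2×2 table; the counts are hypothetical, the function name is illustrative, and SPSS may differ in implementation details.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum every table that is no more likely than the observed one
    # (small tolerance guards against floating-point ties).
    return sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )


# Hypothetical counts: rows = country, columns = characteristic present/absent.
p_value = fisher_exact_two_sided(3, 1, 1, 3)
print(f"two-sided p = {p_value:.4f}")
```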

For self-rated relevance of CPD programme components and satisfaction with the programme on the 0–4 Likert scales, descriptive statistics were calculated (median scores and proportions). Results are presented as bar graphs and tables. Cronbach alpha and Pearson correlation coefficients were used to test the reliability and validity of the test items respectively.
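As an illustration of these descriptive statistics, the sketch below computes the median and the proportion of responses at each point of the 0–4 satisfaction scale. The ratings are invented for the example and the helper name is hypothetical.

```python
from collections import Counter
from statistics import median

# Scale labels as described for the satisfaction items.
LABELS = {
    0: "very unsatisfied",
    1: "unsatisfied",
    2: "neutral",
    3: "satisfied",
    4: "very satisfied",
}


def summarise_likert(ratings):
    """Return the median rating and the proportion of responses per scale point."""
    counts = Counter(ratings)
    n = len(ratings)
    proportions = {score: counts.get(score, 0) / n for score in LABELS}
    return median(ratings), proportions


# Hypothetical ratings from ten participants.
ratings = [4, 3, 4, 2, 3, 4, 4, 1, 3, 4]
med, props = summarise_likert(ratings)
share_satisfied = props[3] + props[4]  # satisfied or very satisfied
for score, label in LABELS.items():
    print(f"{score} ({label}): {props[score]:.0%}")
print(f"median = {med}, satisfied or very satisfied = {share_satisfied:.0%}")
```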

Change in knowledge in online modules, confidence in pedagogy skills and preparing teaching plans among educators was assessed by comparing pre-training and post-training scores. Descriptive statistics are reported based on normality of data. Differences in the scores were analysed using the Wilcoxon signed ranks test, a non-parametric equivalent of the paired t-test. Differences between educators’ microteaching scores by country and institution type were tested using the Mann-Whitney U test. Level of competence demonstrated in the teaching plan and microteaching skill was defined as the percentage of the desired characteristics present in the teaching plan and microteaching session, with the threshold set at 75% and above. The proportion of participants that achieved the desired level of competence in their teaching plan and microteaching skill was calculated. Binary logistic regression models were used to assess the strength of associations between individual educator and institutional characteristics (age, gender, qualifications, length of time as educator, training institution and country) and the overall dichotomised competence score (proportion that achieved competence in teaching plan and microteaching skills). P-values less than 0.05 at 95% confidence interval were considered statistically significant.
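The Wilcoxon signed ranks test ranks the absolute pre-post differences (dropping zero differences and averaging tied ranks) and compares the rank sums of positive and negative changes. The following is a simplified sketch with a tie-corrected normal approximation and no continuity correction; the scores are hypothetical, and the approximation is rough for samples this small, so it illustrates the mechanics rather than reproducing the SPSS output.

```python
import math
from collections import Counter


def wilcoxon_signed_rank(pre, post):
    """Return (W+, W-, z, two-sided p) using a normal approximation."""
    diffs = [after - before for before, after in zip(pre, post) if after != before]
    n = len(diffs)
    ordered = sorted(abs(d) for d in diffs)

    # Assign each distinct absolute difference the mean of the ranks it occupies.
    rank_of = {}
    i = 0
    while i < n:
        j = i
        while j < n and ordered[j] == ordered[i]:
            j += 1
        rank_of[ordered[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    w_plus = sum(rank_of[abs(d)] for d in diffs if d > 0)
    w_minus = sum(rank_of[abs(d)] for d in diffs if d < 0)

    mean_w = n * (n + 1) / 4
    tie_sizes = Counter(ordered).values()
    var_w = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in tie_sizes) / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, w_minus, z, p_two_sided


# Hypothetical pretest and post-test scores (out of 20) for eight educators.
pre_scores = [10, 12, 9, 14, 11, 13, 8, 12]
post_scores = [15, 14, 13, 13, 16, 17, 12, 12]
w_plus, w_minus, z, p = wilcoxon_signed_rank(pre_scores, post_scores)
print(f"W+ = {w_plus}, W- = {w_minus}, z = {z:.2f}, p = {p:.3f}")
```

A large W+ relative to W- indicates that post-training scores tend to exceed pre-training scores.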

Preparation for qualitative data analysis involved a rigorous process of transcription of recorded interviews with key informants. In addition, online free text responses by midwifery educators on what worked well, challenges encountered, and recommendations were extracted in Microsoft Excel format and exported to Microsoft Word for data reduction (coding) and theme development. Qualitative data were analysed using thematic framework analysis by Braun and Clarke (2006), as it provides clear steps to follow, is flexible yet structured, and enables transparency and team working [47]. Due to the small number of transcripts, computer assisted coding in Microsoft Word using the margin and comments tool was used. The six steps of thematic analysis by Braun and Clarke were followed: (i) familiarising oneself with the data through transcription and reading transcripts, looking for recurring issues/inconsistencies and identifying possible categories and sub-categories of data; (ii) generating initial codes: both deductive (using topic guides/research questions) and inductive coding (recurrent views, phrases, patterns from the data) was conducted for transparency; (iii) searching for themes by collating initial codes into potential sub-themes/themes; (iv) reviewing themes by generating a thematic map (code book) of the analysis; (v) defining and naming themes (ongoing analysis to refine the specifics of each sub-theme/theme, and the overall story the analysis tells); and (vi) writing findings/producing a report. Confidentiality was maintained by using pseudonyms for participant identification in the study. Trustworthiness was achieved by (i) respondent validation/check during the interviews for accurate data interpretation; (ii) using a criterion for thematic analysis; (iii) returning to the data repeatedly to check for accuracy in interpretation; and (iv) quality checks and discussions with the study team with expertise in mixed methods research [39, 47].

Integration of findings used the parallel-databases variant, and the integrated findings are synthesised in the discussion section. In this common approach, two parallel strands of data are collected and analysed independently and are only brought together during interpretation. The two sets of independent results are then synthesized or compared during the discussion [39].